Insights and Innovations in Secure GenAI Development

Stay informed with the latest news on GenAI, including insights on deploying GenAI securely, innovative solutions and use cases you can implement, and perspectives from leading experts in the field.

Photo by Possessed Photography on Unsplash

Retrieval-Augmented Generation (RAG) is widely used in natural language processing, particularly for integrating external knowledge bases. However, it often struggles with poorly structured queries—leading to inaccuracies or missed information.

Agentic RAG addresses these issues by refining queries and improving the accuracy of responses. This guide will walk you through how Agentic RAG can elevate your AI systems—complete with a hands-on coding example.

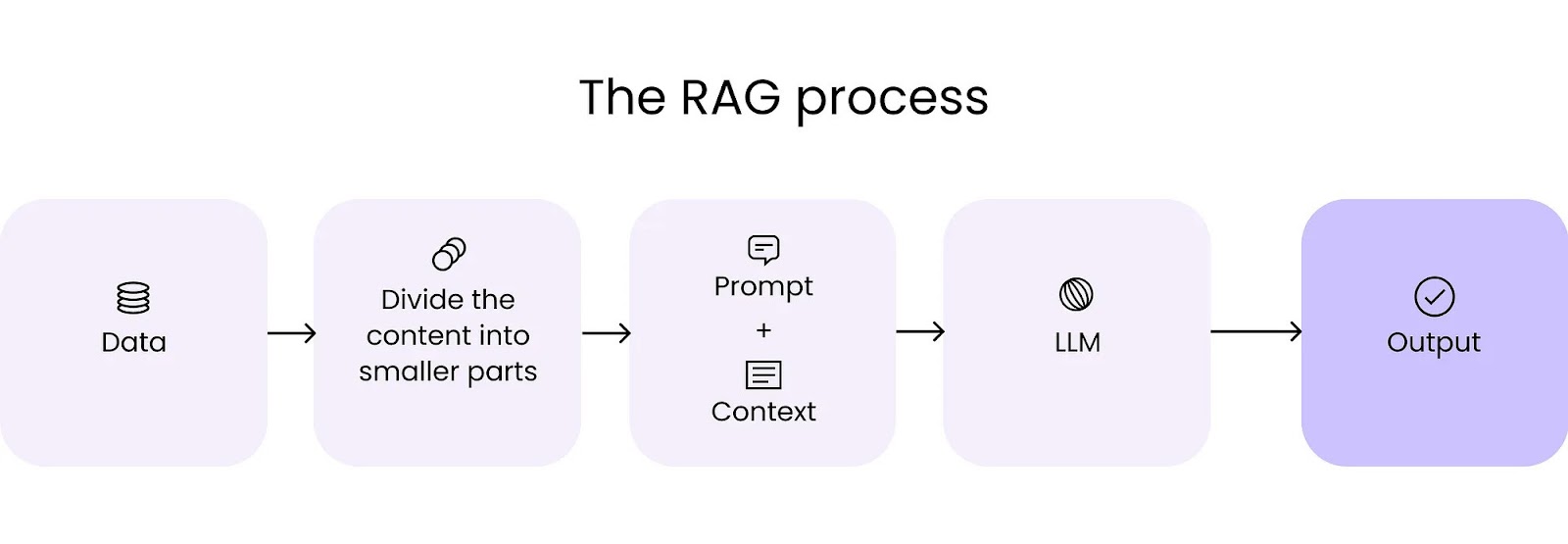

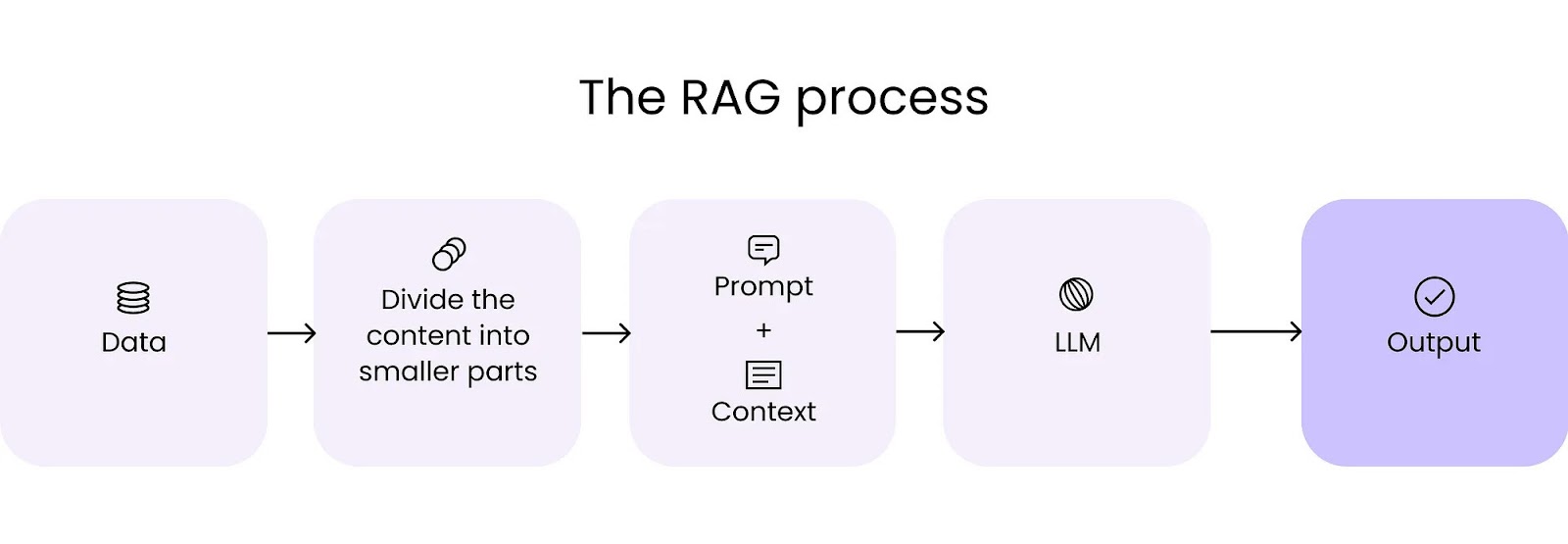

What is RAG?

RAG, or Retrieval-Augmented Generation, is a hybrid approach that combines traditional information retrieval methods with generative language models. Instead of relying solely on a model's internal knowledge, RAG retrieves relevant documents or data from an external knowledge base, using them to generate more accurate and contextually relevant responses.

In a standard RAG setup:

- Retrieval: The system performs a semantic search to find the most relevant pieces of information from a database or knowledge base.

- Augmentation: The retrieved, contextually relevant data is added to the language model's input, so the output is grounded in that data rather than in the model's internal knowledge alone.

- Generation: The retrieved information is then fed into a language model to generate a final response.

This method is useful in scenarios where the language model's internal knowledge might be outdated or insufficient. However, the effectiveness of RAG depends heavily on the quality of the retrieval process. If the search fails to retrieve the right information, the final output may be inaccurate or irrelevant.
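To make the three stages concrete, here is a minimal sketch of a RAG pipeline in plain Python. It is not the implementation built later in this guide: the bag-of-words `embed` function stands in for a real embedding model, `generate` is a placeholder for a language model call, and the three sample documents are invented for illustration.

```python
import math
import re
from collections import Counter

# Toy knowledge base (invented); a real system would hold chunked documents here.
KNOWLEDGE_BASE = [
    "Chroma is a vector database that stores embeddings.",
    "Imposter syndrome is a persistent feeling of self-doubt.",
    "Procrastination means delaying tasks despite the consequences.",
]


def embed(text: str) -> Counter:
    """Stand-in embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Retrieval: rank documents by similarity to the query."""
    q = embed(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def augment(query: str, docs: list[str]) -> str:
    """Augmentation: place the retrieved text in the prompt."""
    context = "\n".join(f"- {d}" for d in docs)
    return f"Context:\n{context}\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Generation: placeholder for a call to a language model."""
    return f"[LLM answer based on a prompt of {len(prompt)} characters]"


docs = retrieve("what is imposter syndrome")
prompt = augment("what is imposter syndrome", docs)
print(prompt)
print(generate(prompt))
```

If `retrieve` returns the wrong document, `generate` still answers from that context, which is why the final answer depends so heavily on retrieval quality.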

The Challenges of Traditional RAG

In a typical RAG setup, a user’s query is processed through a semantic search to retrieve relevant data from a knowledge base. If the query isn't well-structured, the system might return irrelevant results or fail to find important information.
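The effect of query wording can be illustrated with a deliberately simple sketch. It scores documents by word overlap (Jaccard similarity) instead of real embeddings, and the two documents and both queries are invented for this example. Semantic embeddings are more forgiving than word overlap, but they fail in a similar way when a query carries little information about its target.

```python
import re

# Two invented documents, keyed by a short label.
DOCS = {
    "scores": "personality scores for people who are open and agreeable",
    "sleep": "how to improve sleep with a regular bedtime routine",
}


def tokens(text: str) -> set:
    """Lowercased set of words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def overlap(query: str, doc: str) -> float:
    """Jaccard similarity: shared words divided by all words."""
    q, d = tokens(query), tokens(doc)
    return len(q & d) / len(q | d)


def best_match(query: str) -> str:
    """Label of the document with the highest overlap score."""
    return max(DOCS, key=lambda name: overlap(query, DOCS[name]))


vague = "how do i check it"
refined = "personality scores of someone who is open and agreeable"

print(best_match(vague))    # matches on the filler word "how" only
print(best_match(refined))  # matches on the words that describe the target
```

The vague query lands on the unrelated sleep document because the only shared word is "how", while the refined query shares six content words with the document it is looking for.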

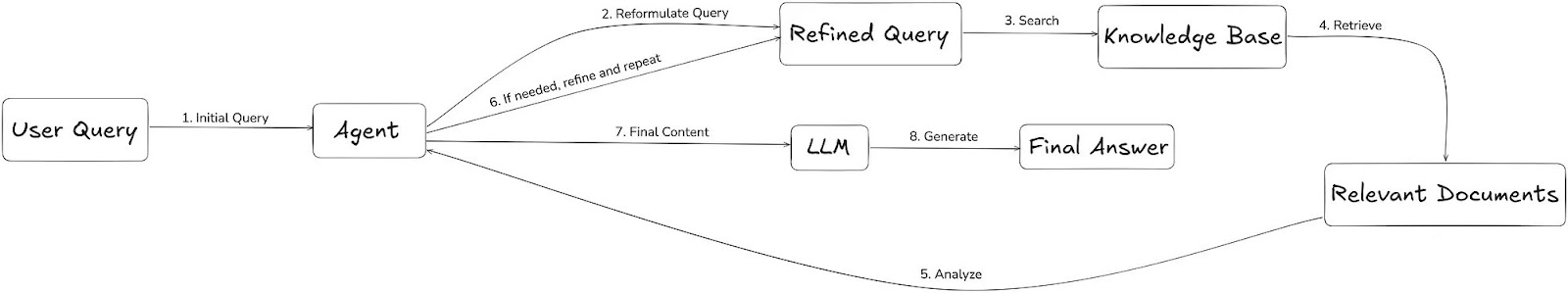

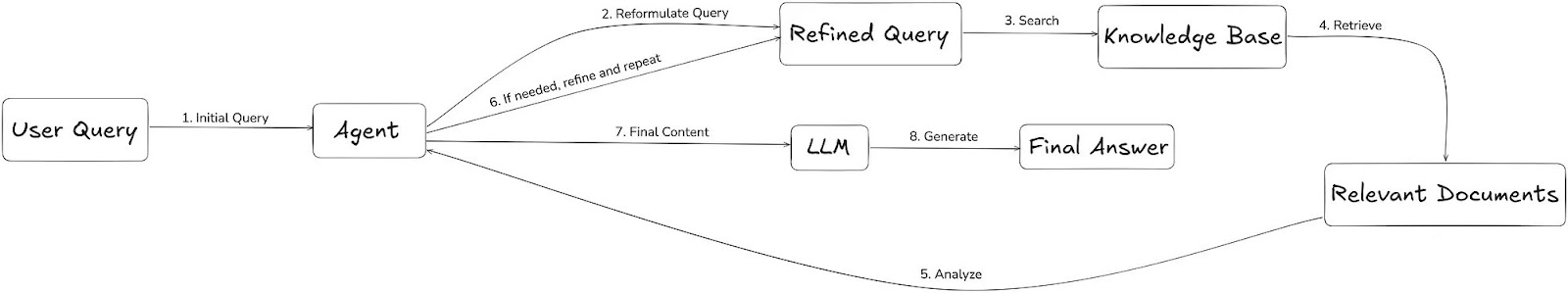

How Agentic RAG Addresses This

Agentic RAG offers a more robust solution by actively refining and re-evaluating queries to improve accuracy and relevance. It uses AI agents that:

- Refine the Query: The AI agent refines the user’s query for better clarity and precision.

- Retrieve Information: The refined query is used to search the knowledge base more effectively.

- Re-evaluate Results: The AI agent can assess the retrieved information and refine the search further if needed.

- Generate the Final Response: Once the agent has the right data, it generates the final answer.

This approach reduces errors and tends to produce more reliable results, at the cost of extra LLM and retrieval calls per question.
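The four steps above can be sketched as a small control loop. This is an illustrative skeleton, not the agent used later in this guide: in a real agent the language model itself decides how to rewrite the query and whether the evidence is sufficient, whereas here those decisions are passed in as plain functions. The names `agentic_rag`, `REWRITES`, and `INDEX` are hypothetical.

```python
from typing import Callable


def agentic_rag(
    question: str,
    refine: Callable[[str, int], str],
    retrieve: Callable[[str], list[str]],
    is_sufficient: Callable[[str, list[str]], bool],
    generate: Callable[[str, list[str]], str],
    max_iterations: int = 3,
) -> str:
    """Refine, retrieve, and re-evaluate in a loop, then generate the answer."""
    evidence: list[str] = []
    for attempt in range(max_iterations):
        query = refine(question, attempt)      # 1. refine the query
        for doc in retrieve(query):            # 2. retrieve information
            if doc not in evidence:
                evidence.append(doc)
        if is_sufficient(question, evidence):  # 3. re-evaluate the results
            break
    return generate(question, evidence)        # 4. generate the final response


# Hypothetical stand-ins so the loop runs without a model or a database.
REWRITES = ["check my score", "procrastination self-assessment scale"]
INDEX = {"procrastination self-assessment scale": ["A scale rates how often you delay tasks."]}

answer = agentic_rag(
    "How can I check my score?",
    refine=lambda q, attempt: REWRITES[min(attempt, len(REWRITES) - 1)],
    retrieve=lambda query: INDEX.get(query, []),
    is_sufficient=lambda q, evidence: len(evidence) > 0,
    generate=lambda q, evidence: f"Answer drawn from {len(evidence)} document(s).",
)
print(answer)
```

The first rewrite finds nothing, so the loop tries a second, more specific query before generating. The `max_iterations` cap keeps the cost bounded when no query succeeds.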

Step-By-Step Guide

Now that you know the theory around Agentic RAG, let’s get practical and build an example. Follow my steps below to learn how to set it up.

Setting Up Your Environment

Before diving into the code, make sure your virtual environment is set up. If not, follow this setup guide.

We'll use Hugging Face's transformers library for the agent, though you can explore alternatives as needed.

Step 1: Install the Required Packages

First, install the necessary libraries:

    pip install langchain langchain-openai langchain-community langchain-chroma langchain-huggingface huggingface-hub python-dotenv sentence-transformers "transformers[agents]"

Now you should be able to see these packages inside your venv folder.
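If you prefer to confirm the installation from Python rather than by browsing the venv folder, a short check like the following may help. It is a sketch that uses only the standard library; the `REQUIRED` list simply mirrors the pip command above, and `installed_versions` is a helper name made up for this example.

```python
from importlib import metadata

# Distribution names taken from the pip command above.
REQUIRED = [
    "langchain",
    "langchain-openai",
    "langchain-community",
    "langchain-chroma",
    "langchain-huggingface",
    "huggingface-hub",
    "python-dotenv",
    "sentence-transformers",
    "transformers",
]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions(REQUIRED).items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```

Run it with the virtual environment activated. Any line that reports NOT INSTALLED points to a package that pip did not install into that environment.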

Step 2: Set Up Imports

Create a main.py file and add the following imports:

    import os
    import logging

    import datasets
    from dotenv import load_dotenv
    from tqdm import tqdm
    from transformers import AutoTokenizer
    from transformers.agents import ReactJsonAgent
    from langchain.docstore.document import Document
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain_community.vectorstores import Chroma
    from langchain_huggingface import HuggingFaceEmbeddings
    from langchain_openai import ChatOpenAI

    # Local modules created later in this guide
    from RetrieverTool import RetrieverTool
    from OpenAIEngine import OpenAIEngine

    # Keep this guard at the bottom of main.py, after main() is defined.
    if __name__ == "__main__":  # Runs only when the file is executed directly
        main()

Step 3: Load the Dataset and Prepare the RAG Components

To start, load your dataset. As an example, I'll use TVRRaviteja/Mental-Health-Data, a mental health dataset hosted on Hugging Face.

    # Load the knowledge base
    knowledge_base = datasets.load_dataset("TVRRaviteja/Mental-Health-Data", split="train")

    # Convert each dataset row into a LangChain Document
    source_docs = [
        Document(page_content=doc["text"])
        for doc in knowledge_base
    ]

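Next, set up a tokenizer, define a text splitter, and split the documents into chunks:

    # Initialize the text splitter
    tokenizer = AutoTokenizer.from_pretrained("thenlper/gte-small")
    text_splitter = RecursiveCharacterTextSplitter.from_huggingface_tokenizer(
        tokenizer,
        chunk_size=200,
        chunk_overlap=20,
        add_start_index=True,
        strip_whitespace=True,
        separators=["\n\n", "\n", ".", " ", ""],
    )

    # The later steps use docs_processed, which the original listing never defines.
    # Assumption: it holds the split chunks, with exact duplicates dropped.
    docs_processed = []
    seen_texts = set()
    for doc in tqdm(source_docs):
        for chunk in text_splitter.split_documents([doc]):
            if chunk.page_content not in seen_texts:
                seen_texts.add(chunk.page_content)
                docs_processed.append(chunk)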

Configure the Tokenizer and Text Splitter

Before generating embeddings, you need a tokenizer and a text splitter to process the dataset effectively.

- Load the pre-trained tokenizer: We use a pre-trained tokenizer from the Hugging Face model hub. The tokenizer of the thenlper/gte-small model converts text into tokens that the model can understand, so chunk sizes are measured in the same units the embedding model uses.

- Set up the recursive character text splitter: The text splitter divides large texts into smaller chunks for processing by the model. Here’s how the arguments customize the splitting behavior:

  - tokenizer: Measures the size of the text chunks.

  - chunk_size=200: Limits each chunk to at most 200 tokens.

  - chunk_overlap=20: Maintains an overlap of up to 20 tokens between consecutive chunks to preserve context.

  - add_start_index=True: Records the start index of each chunk in its metadata, so that chunks can be traced back to their position in the source text.

  - strip_whitespace=True: Removes leading and trailing whitespace from the chunks.

  - separators=["\n\n", "\n", ".", " ", ""]: Specifies the hierarchy of separators, starting with the most significant (the double newline \n\n) down to the least significant (the empty string "", which allows a split between any two characters).
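To see how chunk_size and chunk_overlap interact, here is a simplified sketch in plain Python. It is not how RecursiveCharacterTextSplitter works internally: it cuts fixed windows over a list of tokens and ignores separators entirely, and it reports token offsets where the real splitter records character offsets. The function name `chunk_tokens` is made up for this example.

```python
def chunk_tokens(tokens, chunk_size=200, chunk_overlap=20):
    """Split a token list into windows of at most chunk_size tokens.

    Each window shares chunk_overlap tokens with the previous one.
    Returns (start_index, window) pairs.
    """
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append((start, tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks


# Small numbers make the overlap easy to see.
words = [f"w{i}" for i in range(10)]
for start, chunk in chunk_tokens(words, chunk_size=4, chunk_overlap=1):
    print(start, chunk)
```

With a chunk size of 4 and an overlap of 1, the windows start at offsets 0, 3, and 6, and each window begins with the last token of the previous one. The same arithmetic applies to the 200-token chunks and 20-token overlap used in this guide.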

Final Steps: Implementing and Running Agentic RAG

Now that you've prepared the tokenizer and text splitter, it's time to generate embeddings and store them in a vector database (such as Chroma). Here's how to proceed:

Initialize the Embedding Model

You can use a Hugging Face embedding model to create vector embeddings for your documents.

    # Initialize the embedding model
    embedding_model = HuggingFaceEmbeddings(model_name="thenlper/gte-small")

Create the Vector Database

Store the generated embeddings in a vector database, such as Chroma.

    # Create the vector database
    vectordb = Chroma.from_documents(
        documents=docs_processed,
        embedding=embedding_model,
        persist_directory="chroma",
    )
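For intuition about what a vector database does at query time, the following sketch implements a tiny in-memory store with cosine similarity. It is a hypothetical stand-in, not Chroma's API: the class `TinyVectorStore` is invented for this example, the three-dimensional vectors are made up, and its `similarity_search` takes an embedding directly, whereas the LangChain vector store embeds the query text for you.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """In-memory stand-in for a vector database (hypothetical, not Chroma)."""

    def __init__(self):
        self._rows = []  # (embedding, text) pairs

    def add(self, embedding, text):
        self._rows.append((embedding, text))

    def similarity_search(self, query_embedding, k=2):
        """Return the k texts whose embeddings are closest to the query."""
        ranked = sorted(
            self._rows,
            key=lambda row: cosine_similarity(query_embedding, row[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]


store = TinyVectorStore()
store.add([1.0, 0.0, 0.0], "chunk about procrastination")
store.add([0.0, 1.0, 0.0], "chunk about imposter syndrome")
store.add([0.9, 0.1, 0.0], "chunk about delaying tasks")

print(store.similarity_search([1.0, 0.0, 0.0], k=2))
```

The query vector points in the same direction as the first chunk and almost the same direction as the third, so those two are returned. A production store does the same ranking with approximate nearest-neighbor indexes so that it scales beyond a linear scan.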

Set Up the Retriever Tool

Create a retriever tool that works with any LangChain vector store. This tool handles document retrieval based on semantic similarity. Save it as RetrieverTool.py so that the import in main.py resolves.

    from transformers.agents import Tool


    class RetrieverTool(Tool):
        name = "retriever"
        description = "Using semantic similarity, retrieves some documents from the knowledge base that have the closest embeddings to the input query."
        inputs = {
            "query": {
                "type": "text",
                "description": "The query to perform. This should be semantically close to your target documents. Use the affirmative form rather than a question.",
            }
        }
        output_type = "text"

        def __init__(self, vectordb, **kwargs):
            super().__init__(**kwargs)
            self.vectordb = vectordb

        def forward(self, query: str) -> str:
            assert isinstance(query, str), "Your search query must be a string"

            # Fetch the 7 chunks closest to the query
            docs = self.vectordb.similarity_search(
                query,
                k=7,
            )
            # Label each chunk so the LLM can cite it by number
            return "\nRetrieved documents:\n" + "".join(
                [f"===== Document {str(i)} =====\n" + doc.page_content for i, doc in enumerate(docs)]
            )

1. Attributes:

- inputs: Specifies the expected input for the tool, which is a query of type text. The description suggests the query should be in an affirmative form rather than a question.

- output_type = "text": Specifies that the output of the tool will be text.

2. __init__ Method:

The constructor initializes the RetrieverTool with a vectordb (a vector database used for similarity search) and any additional keyword arguments (kwargs).

3. forward Method:

- Purpose: Implements the logic for performing the document retrieval.

- query: The method accepts a query string as input.

- assert isinstance(query, str): Ensures the query is a string.

- self.vectordb.similarity_search(query, k=7): Performs a similarity search on the vector database using the query, retrieving the top 7 most similar documents.

- return "\nRetrieved documents:\n" + "".join(...): Formats the retrieved documents into a string and returns it. The output includes headers like "===== Document 0 =====" for each document, followed by the document's content.
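The formatting logic of forward can be exercised without a real vector database. The sketch below reproduces the same string layout against a fake store that returns canned results. `FakeDocument`, `FakeVectorDB`, and `format_retrieved` are hypothetical names introduced only for this example; the only thing assumed about the real store is that `similarity_search(query, k=...)` returns objects with a `page_content` attribute, as in RetrieverTool above.

```python
from dataclasses import dataclass


@dataclass
class FakeDocument:
    """Minimal stand-in for a LangChain Document (hypothetical)."""
    page_content: str


class FakeVectorDB:
    """Returns canned results so the formatting can be checked offline."""

    def __init__(self, texts):
        self._docs = [FakeDocument(t) for t in texts]

    def similarity_search(self, query, k=7):
        # A real store would rank by similarity; this one ignores the query.
        return self._docs[:k]


def format_retrieved(vectordb, query, k=7):
    """Build the same string layout as RetrieverTool.forward."""
    docs = vectordb.similarity_search(query, k=k)
    return "\nRetrieved documents:\n" + "".join(
        f"===== Document {i} =====\n" + doc.page_content for i, doc in enumerate(docs)
    )


db = FakeVectorDB(["First chunk.\n", "Second chunk.\n"])
print(format_retrieved(db, "personality scores"))
```

Because the pieces are joined with an empty string, a chunk that does not end in a newline runs straight into the next "===== Document" header. If that makes the output hard to read, joining with "\n" instead is one option.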

Develop the OpenAI Engine

Set up an engine that uses OpenAI as the LLM behind the agent. This engine handles the interaction with the OpenAI API to generate responses; retrieval stays with the retriever tool. Save it as OpenAIEngine.py so that the import in main.py resolves.

    import os

    from dotenv import load_dotenv
    from openai import OpenAI
    from transformers.agents.llm_engine import MessageRole, get_clean_message_list

    load_dotenv()  # Read OPENAI_API_KEY from a .env file

    # OpenAI's chat API does not accept the agent's tool-response role,
    # so tool output is sent as user messages instead.
    openai_role_conversions = {
        MessageRole.TOOL_RESPONSE: MessageRole.USER,
    }


    class OpenAIEngine:
        def __init__(self, model_name="gpt-4-turbo"):
            self.model_name = model_name
            self.client = OpenAI(
                api_key=os.getenv("OPENAI_API_KEY"),
            )

        def __call__(self, messages, stop_sequences=None):
            # Convert roles into the form the chat API accepts
            messages = get_clean_message_list(messages, role_conversions=openai_role_conversions)

            response = self.client.chat.completions.create(
                model=self.model_name,
                messages=messages,
                stop=stop_sequences,
                temperature=0.5,
            )
            return response.choices[0].message.content

Create the Agent

Combine the retriever tool and OpenAI engine into an agent using ReactJsonAgent. This agent will process queries, retrieve relevant information, and generate responses.

    retriever_tool = RetrieverTool(vectordb)
    llm_engine = OpenAIEngine()

    # Create the agent
    agent = ReactJsonAgent(tools=[retriever_tool], llm_engine=llm_engine, max_iterations=3, verbose=2)

Run the Agentic Code

    def run_agentic_rag(question: str) -> str:
        enhanced_question = f"""Using the information contained in your knowledge base, which you can access with the 'retriever' tool,
    give a comprehensive answer to the question below.
    Respond only to the question asked, response should be concise and relevant to the question.
    If you cannot find information, do not give up and try calling your retriever again with different arguments!
    Make sure to have covered the question completely by calling the retriever tool several times with semantically different queries.
    Your queries should not be questions but affirmative form sentences: e.g. rather than "How to check personality scores of someone who is open and agreeable?", query should be "find me personality scores of someone who is open and agreeable".

    Question:
    {question}"""

        return agent.run(enhanced_question)

Compare with Standard RAG

Implement a standard RAG method to compare with the Agentic RAG approach. This will help you understand the improvements and benefits of Agentic RAG.

    def run_standard_rag(question: str) -> str:
        # Retrieve once with the raw question. The original listing omitted this
        # step even though the prompt refers to supporting documents (assumed fix).
        context = retriever_tool.forward(question)

        prompt = f"""Given the question and supporting documents below, give a comprehensive answer to the question.
    Respond only to the question asked, response should be concise and relevant to the question.
    Provide the number of the source document when relevant.

    Question:
    {question}

    {context}
    """
        messages = [{"role": "user", "content": prompt}]
        reader_llm = ChatOpenAI(model="gpt-4-turbo", api_key=os.getenv("OPENAI_API_KEY"))
        ai_msg = reader_llm.invoke(messages)
        return ai_msg.content

Now, call these functions from the main function that main.py invokes at the bottom of the file (see Step 2).

    def main():
        # init() is not shown in this guide. It is assumed to run the setup steps
        # above: load the dataset, build the vector store, and create the agent.
        init()

        question = """
    How can i check my score? If I am procarstinating and but at the same time I have imposter syndrome.
    """
        print(f"Question: {question}")

        agentic_answer = run_agentic_rag(question)
        print("Agentic RAG Answer:")
        print(f"Answer: {agentic_answer}")

        standard_answer = run_standard_rag(question)
        print("\nStandard RAG Answer:")
        print(f"Answer: {standard_answer}")

Run the Script

Open your terminal and run the script using the following command:

    python main.py

Compare the Outputs

After running the script, compare the outputs from Agentic RAG and standard RAG. Analyze how Agentic RAG refines the query and delivers more accurate and relevant results.

Query: How can I check my score? If I am procrastinating and at the same time I have imposter syndrome.

| Standard RAG Answer | Agentic RAG Answer |
|---|---|
| To check your score, you can refer to Documents 4, 5, and 6, which contain information on Personality Scores. These documents will provide the specific details you need regarding your scores. If procrastination or imposter syndrome is affecting your ability to check your scores, consider setting a specific, manageable goal to review your scores at a designated time, thereby reducing the tendency to delay due to these feelings. | To check your "score" or assess yourself regarding procrastination and imposter syndrome, you can use specific psychological self-assessment tools. Unfortunately, specific tools or questionnaires were not identified in the retrieved documents. However, generally, you can consider the following approaches: 1. Procrastination Assessment: For procrastination, tools like the "Procrastination Assessment Scale for Students (PASS)" or the "Procrastination Inventory" can be used to measure levels of procrastination in various contexts. 2. Imposter Syndrome Assessment: For imposter syndrome, the "Clance Imposter Phenomenon Scale" is a commonly used tool to assess feelings of fraudulence and self-doubt in personal achievement contexts. These tools typically consist of a series of statements where you rate your agreement or frequency, resulting in a score that indicates your level of procrastination or imposter feelings. You can find these assessments online or through psychological services. They offer a structured way to reflect on your behaviors and feelings, providing a "score" that reflects the severity or frequency of the traits in question. |

Note: Results depend on retrieval quality. A stronger embedding model and a larger, higher-quality dataset will often improve them.

Securing Data in RAG and Agentic RAG Deployments

When implementing RAG, especially in enterprise or sensitive environments, securing the data becomes paramount. Here's how to ensure that your RAG implementation is both effective and secure:

- Ensure proper authorization: Verify that users only access the data they are authorized to see. This means embedding a robust authorization layer within your RAG system that cross-checks user permissions before any data is retrieved or generated.

- Embed permission layers on data: Attach permission metadata to the data itself when it is indexed, and enforce it during retrieval. This ensures that when a similarity search is performed, the system retrieves and generates information based only on what the user is authorized to access. This is particularly critical when dealing with sensitive or regulated data, as it helps prevent unauthorized information disclosure.

- Importance in Agentic RAG: By leveraging agents, we can refine queries to mitigate potential security risks, such as the exposure of Personally Identifiable Information (PII). This approach enables the implementation of an additional security layer before the data access layer. Agents can also dynamically adapt to evolving security requirements, continuously monitoring and adjusting queries to prevent unauthorized data access. This ensures that sensitive information is consistently protected, even as threats and data patterns change.
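As a rough illustration of the points above, the sketch below combines two guards: permission metadata stored with each chunk and checked before ranking, and a redaction step applied to the query before it reaches retrieval. Everything here is hypothetical: `SecuredChunk`, `redact_pii`, and `authorized_search` are names invented for this example, the word-overlap ranking stands in for a real similarity search, and the email pattern covers only one simple kind of PII.

```python
import re
from dataclasses import dataclass, field


@dataclass
class SecuredChunk:
    """A chunk of text stored together with its permission metadata."""
    text: str
    allowed_groups: set = field(default_factory=set)


# Simplistic email pattern; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact_pii(query: str) -> str:
    """Agent-side guard: mask email addresses before the query reaches retrieval."""
    return EMAIL.sub("[REDACTED_EMAIL]", query)


def authorized_search(chunks, query, user_groups, k=3):
    """Filter by permission first, then rank only what the user may see."""
    visible = [c for c in chunks if c.allowed_groups & set(user_groups)]
    terms = set(redact_pii(query).lower().split())
    ranked = sorted(
        visible,
        key=lambda c: len(terms & set(c.text.lower().split())),
        reverse=True,
    )
    return [c.text for c in ranked[:k]]


chunks = [
    SecuredChunk("salary bands for engineering", {"hr"}),
    SecuredChunk("engineering onboarding guide", {"hr", "engineering"}),
]

print(authorized_search(chunks, "engineering salary bands", ["engineering"]))
print(redact_pii("send results to jane.doe@example.com"))
```

Filtering before ranking matters: the salary chunk is the best textual match for the query, yet a user in the engineering group never sees it because it is removed from the candidate set before any scoring happens.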

Conclusion

Agentic RAG tackles the limitations of traditional RAG by refining and improving the accuracy of query responses. By following this guide, you can implement Agentic RAG in your AI systems, ensuring more precise and reliable outputs.

We would love to talk to you about implementing an Agentic RAG infrastructure—while ensuring security and compliance are covered from the get-go.

Please reach out to schedule a time with us, or connect with me on LinkedIn and explore the full code on GitHub.

Improving Retrieval-Augmented Generation with Agentic RAG: A Step-By-Step Guide for AI Leaders [With Example Code]

Photo by Possessed Photography on Unsplash

Retrieval-Augmented Generation (RAG) is widely used in natural language processing, particularly for integrating external knowledge bases. However, it often struggles with poorly structured queries—leading to inaccuracies or missed information.

Agentic RAG addresses these issues by refining queries and improving the accuracy of responses. This guide will walk you through how Agentic RAG can elevate your AI systems—complete with a hands-on coding example.

What is RAG?

RAG, or Retrieval-Augmented Generation, is a hybrid approach that combines traditional information retrieval methods with generative language models. Instead of relying solely on a model's internal knowledge, RAG retrieves relevant documents or data from an external knowledge base, using them to generate more accurate and contextually relevant responses.

In a standard RAG setup:

- Retrieval: The system performs a semantic search to find the most relevant pieces of information from a database or knowledge base.

- Augment: The "augmented" aspect refers to the enhancement of the language model's output by integrating real-time, contextually relevant data from the retrieval phase, resulting in more accurate and tailored responses.

- Generation: The retrieved information is then fed into a language model to generate a final response.

This method is useful in scenarios where the language model's internal knowledge might be outdated or insufficient. However, the effectiveness of RAG depends heavily on the quality of the retrieval process. If the search fails to retrieve the right information, the final output may be inaccurate or irrelevant.

The Challenges of Traditional RAG

In a typical RAG setup, a user’s query is processed through a semantic search to retrieve relevant data from a knowledge base. If the query isn't well-structured, the system might return irrelevant results or fail to find important information.

How Agentic RAG Addresses This

Agentic RAG offers a more robust solution by actively refining and re-evaluating queries to ensure higher accuracy and relevance. Here's how it works:

Agentic RAG uses AI agents that:

- Refine the Query: The AI agent refines the user’s query for better clarity and precision.

- Retrieve Information: The refined query is used to search the knowledge base more effectively.

- Re-evaluate Results: The AI agent can assess the retrieved information and refine the search further if needed.

- Generate the Final Response: Once the agent has the right data, it generates the final answer.

This approach reduces errors and ensures more reliable results.

Step-By-Step Guide

Now that you know the theory around Agentic RAG, let’s get practical and build an example. Follow my steps below to learn how to set it up.

Setting Up Your Environment

Before diving into the code, make sure your virtual environment is set up. If not, follow this setup guide.

We'll use HuggingFace's transformers library, though you can explore other alternatives as needed.

Step 1: Install the Required Packages

First, install the necessary libraries:

pip install langchain langchain-openai langchain-community langchain-chroma langchain-huggingface huggingface-hub python-dotenv sentence-transformers "transformers[agents]"

Now you should be able to see these packages inside your venv folder.

Step 2: Set Up Imports

Create a main.py file and add the following imports:

1import os

2import datasets

3from dotenv import load_dotenv

4from transformers import AutoTokenizer

5from langchain.docstore.document import Document

6from langchain.text_splitter import RecursiveCharacterTextSplitter

7from langchain_community.vectorstores import Chroma

8from langchain_huggingface import HuggingFaceEmbeddings

9from tqdm import tqdm

10from transformers.agents import ReactJsonAgent

11from langchain_openai import ChatOpenAI

12import logging

13from RetrieverTool import RetrieverTool

14from OpenAIEngine import OpenAIEngine

15

16if __name__ == "__main__": #This is called when the file is called directly

17 main()

Step 3: Load the Dataset and Prepare the RAG Components

To start, load your dataset. As an example, I’ll use a mental health dataset from Huggingface. You can find it here.

# Load the knowledge base

knowledge_base = datasets.load_dataset("TVRRaviteja/Mental-Health-Data", split="train")

# Convert dataset to Document objects

source_docs = [

Document(page_content=doc["text"]) # Convert dataset into array of Langchain Document

for doc in knowledge_base

Next, set up a tokenizer and define a text splitter:

# Initialize the text splitter

tokenizer = AutoTokenizer.from_pretrained("thenlper/gte-small")

text_splitter = RecursiveCharacterTextSplitter.from_huggingface_tokenizer(

tokenizer,

chunk_size=200,

chunk_overlap=20,

add_start_index=True,

strip_whitespace=True,

separators=["\n\n", "\n", ".", " ", ""],

)

Configure the Tokenizer and Text Splitter

Before generating embeddings, you need to set up a tokenizer and text splitter to process the dataset effectively.

- Load the Pre-trained Tokenizer:some text

- We use a pre-trained tokenizer from the Hugging Face model hub. The thenlper/gte-small model is used to convert text into tokens that the model can understand.

- Set Up the Recursive Character Text Splitter:some text

- The text splitter divides large texts into smaller chunks for processing by the model. Here’s how the arguments customize the splitting behavior:some text

- tokenizer: Measures the size of the text chunks.

- chunk_size=200: Sets each chunk to 200 tokens.

- chunk_overlap=20: Maintains a 20-token overlap between chunks to preserve context.

- add_start_index=True: Adds the start index of each chunk, aligning them with their positions in the source text.

- strip_whitespace=True: Removes leading or trailing whitespace from the chunks.

- separators=["\n\n", "\n", ".", " ", ""]: Specifies the hierarchy of separators, starting with the most significant (e.g., double newline \n\n) down to the least significant (e.g., space " ").

- The text splitter divides large texts into smaller chunks for processing by the model. Here’s how the arguments customize the splitting behavior:some text

Final Steps: Implementing and Running Agentic RAG

Now that you've prepared the tokenizer and text splitter, it's time to generate embeddings and store them in a vector database (such as Chroma). Here's how to proceed:

Initialize the Embedding Model:

You can use HuggingFace’s embedding model to create vector embeddings for your documents.

# Initialize the embedding model

embedding_model = HuggingFaceEmbeddings(model_name="thenlper/gte-small")

# Create the vector database

vectordb = Chroma.from_documents(

documents=docs_processed,

embedding=embedding_model,

persist_directory="chroma"

)

Create the Vector Database

Store the generated embeddings in a vector database, such as Chroma.

Set Up the Retriever Tool

Create a generic retriever tool for all vector stores in Langchain. This tool will handle document retrieval based on semantic similarity.

1from transformers.agents import Tool

2class RetrieverTool(Tool):

3 name = "retriever"

4 description = "Using semantic similarity, retrieves some documents from the knowledge base that have the closest embeddings to the input query."

5 inputs = {

6 "query": {

7 "type": "text",

8 "description": "The query to perform. This should be semantically close to your target documents. Use the affirmative form rather than a question.",

9 }

10 }

11 output_type = "text"

12 def __init__(self, vectordb, **kwargs):

13 super().__init__(**kwargs)

14 self.vectordb = vectordb

15 def forward(self, query: str) -> str:

16 assert isinstance(query, str), "Your search query must be a string"

17

18 docs = self.vectordb.similarity_search(

19 query,

20 k=7,

21 )

22 return "\nRetrieved documents:\n" + "".join(

23 [f"===== Document {str(i)} =====\n" + doc.page_content for i, doc in enumerate(docs)]

24 )

Attributes:

. inputs: Specifies the expected input for the tool, which is a query of type text. The description suggests the query should be in an affirmative form rather than a question.

. output_type = "text": Specifies that the output of the tool will be text.

2. __init__ Method:

The constructor initializes the RetrieverTool with a vectordb (a vector database used for similarity search) and any additional keyword arguments (kwargs).

3. forward Method:

- Purpose: Implements the logic for performing the document retrieval.

- query: The method accepts a query string as input.

- assert isinstance(query, str): Ensures the query is a string.

- self.vectordb.similarity_search(query, k=7): Performs a similarity search on the vector database using the query, retrieving the top 7 most similar documents.

- return "\nRetrieved documents:\n" + "".join(...): Formats the retrieved documents into a string and returns it. The output includes headers like "===== Document 0 =====" for each document, followed by the document's content.

Develop the OpenAI Engine

Set up an engine that uses OpenAI for retrieval and LLM operations. This engine will handle the interaction with the OpenAI API to generate responses.

1import os

2from openai import OpenAI

3from dotenv import load_dotenv

4from transformers.agents.llm_engine import MessageRole, get_clean_message_list

5

6load_dotenv()

7

8openai_role_conversions = {

9 MessageRole.TOOL_RESPONSE: MessageRole.USER,

10}

11

12class OpenAIEngine:

13 def __init__(self, model_name="gpt-4-turbo"):

14 self.model_name = model_name

15 self.client = OpenAI(

16 api_key=os.getenv("OPENAI_API_KEY"),

17 )

18

19 def __call__(self, messages, stop_sequences=[]):

20 messages = get_clean_message_list(messages, role_conversions=openai_role_conversions)

21

22 response = self.client.chat.completions.create(

23 model=self.model_name,

24 messages=messages,

25 stop=stop_sequences,

26 temperature=0.5,

27 )

28 return response.choices[0].message.contentCreate the Agent

Combine the retriever tool and OpenAI engine into an agent using ReactJsonAgent. This agent will process queries, retrieve relevant information, and generate responses.

retriever_tool = RetrieverTool(vectordb)

llm_engine = OpenAIEngine()

# Create the agent

agent = ReactJsonAgent(tools=[retriever_tool], llm_engine=llm_engine, max_iterations=3, verbose=2)Run the Agentic Code

def run_agentic_rag(question: str) -> str:

enhanced_question = f"""Using the information contained in your knowledge base, which you can access with the 'retriever' tool,

give a comprehensive answer to the question below.

Respond only to the question asked, response should be concise and relevant to the question.

If you cannot find information, do not give up and try calling your retriever again with different arguments!

Make sure to have covered the question completely by calling the retriever tool several times with semantically different queries.

Your queries should not be questions but affirmative form sentences: e.g. rather than "How to check personality scores of someone who is open and agreeable?", query should be "find me personality scores of someone who is open and agreeable".

Question:

{question}"""

return agent.run(enhanced_question)

Compare with Standard RAG

Implement a standard RAG method to compare with the Agentic RAG approach. This will help you understand the improvements and benefits of Agentic RAG.

def run_standard_rag(question: str) -> str:

prompt = f"""Given the question and supporting documents below, give a comprehensive answer to the question.

Respond only to the question asked, response should be concise and relevant to the question.

Provide the number of the source document when relevant.

Question:

{question}

"""

messages = [{"role": "user", "content": prompt}]

reader_llm = ChatOpenAI(model="gpt-4-turbo", api_key=os.getenv("OPENAI_API_KEY"))

ai_msg = reader_llm.invoke(messages)

return ai_msg.content

Now, run these functions by making changes in the main function that we wrote in the first step.

def main():

init()

question = """

How can i check my score? If I am procarstinating and but at the same time I have imposter syndrome.

"""

print(f"Question: {question}")

agentic_answer = run_agentic_rag(question)

print("Agentic RAG Answer:")

print(f"Answer: {agentic_answer}")

standard_answer = run_standard_rag(question)

print("\nStandard RAG Answer:")

print(f"Answer: {standard_answer}")

Run the Script

Open your terminal and run the script using the following command:

python main.py

Compare the Outputs

After running the script, compare the outputs from Agentic RAG and standard RAG. Analyze how Agentic RAG refines the query and delivers more accurate and relevant results.

Query: How can I check my score? If I am procrastinating and at the same time I have imposter syndrome.

| Standard RAG Answer | Agentic RAG Answer |

|---|---|

| To check your score, you can refer to Documents 4, 5, and 6, which contain information on Personality Scores. These documents will provide the specific details you need regarding your scores. If procrastination or imposter syndrome is affecting your ability to check your scores, consider setting a specific, manageable goal to review your scores at a designated time, thereby reducing the tendency to delay due to these feelings. |

To check your "score" or assess yourself regarding procrastination and imposter syndrome, you can use

specific psychological self-assessment tools. Unfortunately, specific tools or questionnaires were not

identified in the retrieved documents. However, generally, you can consider the following approaches: 1. Procrastination Assessment: For procrastination, tools like the "Procrastination Assessment Scale for Students (PASS)" or the "Procrastination Inventory" can be used to measure levels of procrastination in various contexts. 2. Imposter Syndrome Assessment: For imposter syndrome, the "Clance Imposter Phenomenon Scale" is a commonly used tool to assess feelings of fraudulence and self-doubt in personal achievement contexts. These tools typically consist of a series of statements where you rate your agreement or frequency, resulting in a score that indicates your level of procrastination or imposter feelings. You can find these assessments online or through psychological services. They offer a structured way to reflect on your behaviors and feelings, providing a "score" that reflects the severity or frequency of the traits in question. |

Note: You can always improve the performance by having a better embedding model with more dimensions and a bigger dataset with better data quality.

Securing Data in RAG and agentic RAG deployments

When implementing RAG, especially in enterprise or sensitive environments, securing the data becomes paramount. Here's how to ensure that your RAG implementation is both effective and secure:

- Ensure proper authorization: Verify that users only access the data they are authorized to see. This means embedding a robust authorization layer within your RAG system that cross-checks user permissions before any data is retrieved or generated.

- Embed permission layers on data: Attach permissions to the data itself, for example as metadata stored alongside each chunk, and enforce them during the retrieval process. This ensures that when a similarity search is performed, the system retrieves and generates information based only on what the user is authorized to access. This is particularly critical when dealing with sensitive or regulated data, as it helps prevent unauthorized information disclosure.

- Importance in Agentic RAG: By leveraging agents, we can refine queries to mitigate potential security risks, such as the exposure of Personally Identifiable Information (PII). This approach enables the implementation of an additional security layer before the data access layer. Agents can also dynamically adapt to evolving security requirements, continuously monitoring and adjusting queries to prevent unauthorized data access. This ensures that sensitive information is consistently protected, even as threats and data patterns change.
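The three points above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used earlier in this guide: the `Chunk` class, its `allowed_roles` metadata, the `secure_search` and `redact_pii` helpers, and the toy two-dimensional embeddings are all hypothetical. The e-mail regex is deliberately simple and would not catch every form of PII.

```python
import math
import re
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    embedding: list[float]
    # Hypothetical permission metadata stored alongside each chunk.
    allowed_roles: set[str] = field(default_factory=set)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def redact_pii(query: str) -> str:
    """Mask e-mail addresses before the query reaches the retriever."""
    return re.sub(r"[\w.+-]+@[\w-]+\.[\w.-]+", "[EMAIL]", query)


def secure_search(query_embedding, user_roles, chunks, k=3):
    # Filter by permission first, so unauthorized chunks are never scored or returned.
    visible = [c for c in chunks if c.allowed_roles & user_roles]
    ranked = sorted(
        visible, key=lambda c: cosine(query_embedding, c.embedding), reverse=True
    )
    return ranked[:k]


chunks = [
    Chunk("Q3 payroll summary", [0.9, 0.1], {"finance"}),
    Chunk("Public holiday calendar", [0.8, 0.2], {"finance", "staff"}),
    Chunk("Office seating plan", [0.1, 0.9], {"staff"}),
]

# A "staff" user never sees the payroll chunk, even though it is the closest match.
results = secure_search([1.0, 0.0], {"staff"}, chunks, k=2)
clean_query = redact_pii("email jane.doe@example.com the report")
```

Filtering before ranking, rather than after, means a restricted chunk cannot influence the results or leak into the prompt. In an agentic setup, a step like `redact_pii` would be one of the checks an agent runs while rewriting the query.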

Conclusion

Agentic RAG tackles the limitations of traditional RAG by refining and improving the accuracy of query responses. By following this guide, you can implement Agentic RAG in your AI systems, ensuring more precise and reliable outputs.

We would love to talk to you about implementing an Agentic RAG infrastructure—while ensuring security and compliance are covered from the get-go.

Please reach out to schedule a time with us, or connect with me on LinkedIn and explore the full code on GitHub.

The Future of Finance: How GenAI Is Transforming the Industry

Introduction

Generative artificial intelligence (GenAI) is a powerful force that’s changing the way we work, especially in financial services. From making operations smoother to ensuring strict compliance, GenAI is driving a whole new way of working in finance.

As the Global Head of Strategy and Innovation at CriticalRiver and an advisor to Opsin, I’ve had the chance to see these changes firsthand. My focus is on pushing strategic initiatives and sparking innovation, always looking for how new technologies can improve the way we operate and bring real value to our clients.

So, how exactly is GenAI changing the financial services industry? In this article, I’ll share what I’ve learned. Having spent the last several months deploying financial copilots at Fortune 500 companies, I’ll cover how GenAI is revolutionizing accounting, the challenges we’re facing, key use cases, security concerns, and the benefits that GenAI brings to these sectors.

Revolutionizing financial services with GenAI

GenAI is transforming the financial services industry in ways we couldn't have imagined before. By processing huge amounts of data and making real-time decisions, GenAI helps financial institutions work more efficiently and stay compliant with regulations. It automates routine tasks and provides deeper insights through advanced analytics. This technological shift allows financial organizations to run more smoothly, meet regulatory standards, and better serve their clients' ever-changing needs.

The challenges in transforming financial services and accounting

Transforming financial services and accounting involves overcoming several significant challenges. These obstacles can impede progress and require innovative solutions:

- Security and compliance: Ensuring data security and regulatory compliance is a constant challenge for financial institutions. Protecting sensitive information while adhering to stringent regulations demands robust security measures and vigilant monitoring.

- Time-consuming operations: Many data operations in the financial sector are labor-intensive and slow, making it difficult for organizations to respond quickly to changing conditions.

- Manual data collection: Gathering data from various sources manually is inefficient and prone to errors.

- Complex systems: Navigating multiple platforms, such as ERP systems, can be overwhelming and hinder seamless operations.

- Multiple systems for budgeting and forecasting: Using different systems for budgeting and forecasting complicates processes and increases the risk of errors.

- Manual reporting: Generating daily, reconciliation, and flux reports manually is tedious and time-consuming.

Benefits of GenAI in automating financial and accounting tasks

GenAI automation offers several key benefits, making it a smart investment for financial institutions:

- Increased efficiency: GenAI cuts down on manual tasks, freeing up employees for more important work. Automating processes makes operations faster and smoother.

- Enhanced accuracy: GenAI reduces human errors, ensuring accurate data. This leads to better financial reports and decisions.

- Improved decisions: GenAI provides real-time insights, helping organizations make smarter financial choices. Advanced analytics enable better planning.

- Cost optimization: GenAI reduces staffing and operational costs, boosting profitability.

- Improved risk management: GenAI spots anomalies and potential fraud, strengthening risk management.

- Better customer experience: GenAI speeds up payments and responses to inquiries, keeping customers happy.

Let’s take a look at an example.

Imagine a company that processes thousands of invoices by hand each month.

By switching to a GenAI-driven invoice processing system, the company can automate the entire process, from data extraction to approvals. This change boosts efficiency and accuracy, helps make better decisions, saves costs, improves risk management, and creates a better experience for customers.

Key use cases

GenAI has numerous applications in financial services and accounting, offering transformative potential across various functions. Here are some key use cases:

Accounting

GenAI can streamline and improve several accounting processes, including:

- Reconciliation: Automatically matching transactions to ensure data accuracy, reducing the time and effort required for manual reconciliation.

- Flux analysis: Identifying and explaining variances in financial data, providing deeper insights into financial performance.

- Consolidation: Combining financial statements from different entities, streamlining the consolidation process.

- Reporting: Generating accurate and timely financial reports, improving decision-making.

- Advanced analytics: Using data to gain deeper insights and identify trends, helping organizations make more informed decisions.

- Forecasting: Predicting future financial performance, allowing organizations to plan more effectively.

- Workflow automation: Streamlining processes to save time and reduce errors.
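To make the reconciliation item concrete, here is a minimal sketch of the deterministic matching step that an automated workflow might wrap. It is an illustration only: the `reconcile` function, the record fields (`id`, `date`, `amount`), and the sample data are assumptions, and real reconciliation usually needs fuzzier rules (date tolerances, partial payments, reference numbers).

```python
from collections import defaultdict
from decimal import Decimal


def reconcile(ledger, bank):
    """Match entries on (date, amount); return matched pairs and leftovers from each side."""
    unmatched_bank = defaultdict(list)
    for entry in bank:
        unmatched_bank[(entry["date"], entry["amount"])].append(entry)

    matched, ledger_only = [], []
    for entry in ledger:
        candidates = unmatched_bank[(entry["date"], entry["amount"])]
        if candidates:
            # Pair each ledger entry with at most one bank entry.
            matched.append((entry, candidates.pop(0)))
        else:
            ledger_only.append(entry)

    bank_only = [e for entries in unmatched_bank.values() for e in entries]
    return matched, ledger_only, bank_only


ledger = [
    {"id": "L1", "date": "2024-03-01", "amount": Decimal("120.00")},
    {"id": "L2", "date": "2024-03-02", "amount": Decimal("75.50")},
]
bank = [
    {"id": "B1", "date": "2024-03-01", "amount": Decimal("120.00")},
    {"id": "B2", "date": "2024-03-03", "amount": Decimal("19.99")},
]

matched, ledger_only, bank_only = reconcile(ledger, bank)
```

The unmatched items (`ledger_only` and `bank_only`) are the exceptions a person, or a GenAI assistant with the right context, would then investigate and explain.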

Example Use Case: Manual Reporting

Let’s consider a common challenge that many financial institutions face: manual reporting. Today, financial teams often spend countless hours gathering data from various sources, consolidating it into reports, and ensuring that everything complies with regulatory standards. This process is incredibly time-consuming and prone to human error, leading to potential inaccuracies, time wasted correcting errors, and compliance risks.

For example, imagine a company that needs to generate daily financial reports. Currently, the team might be manually pulling data from multiple systems, cross-referencing it, and then compiling it into a final report. This process could take several hours each day, involving repetitive tasks that are both tedious and error-prone. If discrepancies are found, the team must spend additional time identifying and correcting them, further delaying the reporting process.

Here is a before-and-after to see how GenAI can transform this process:

| Aspect | Before (Manual Reporting) | After (AI-Driven Reporting) |
|---|---|---|
| Data Integration | Manually gathering data from multiple sources | Automatic integration and consolidation of data |
| Time Required | Several hours each day | Completed in minutes, 10x faster |
| Accuracy | Prone to human error, with frequent need for additional checks | Real-time validation, minimizing errors |
| Compliance | Manual oversight required to meet regulatory standards | Automated compliance checks ensuring adherence |
| Risk Management | High risk of errors leading to potential compliance issues | Proactive risk management with automated discrepancy detection |
| Employee Focus | Employees stuck on repetitive, time-consuming tasks | Employees freed up to focus on strategic analysis |
| Cost Efficiency | Higher labor costs due to extensive manual work | Reduced costs, allowing resources to be used more strategically |
| Final Output | Reports often delayed and potentially inaccurate | Fast, accurate, and reliable reports |
| Customer Experience | Delays and errors can impact decision-making and service delivery | Better decision-making with timely, accurate reporting |

This before-and-after comparison shows how GenAI can transform the reporting process, making it faster, more accurate, and more efficient, while also benefiting overall business performance.

Considerations for implementing GenAI in financial services and accounting

We’ve reviewed the benefits and challenges and a few examples, but successful implementation of GenAI requires more than just adopting the latest technology. It demands a thoughtful strategy that aligns with your organization’s goals, addresses potential risks, and takes into account the complex landscape of financial operations.

GenAI isn’t a one-size-fits-all solution. To truly benefit from its capabilities, organizations need to carefully plan their approach, balancing innovation with caution. The right strategy can help you streamline operations and also unlock new opportunities for growth and competitive advantage.

So, how should organizations approach implementing GenAI in financial services and accounting?

It starts with a strategic approach that balances both defensive and offensive strategies to fully leverage GenAI’s benefits while managing potential risks. Here’s how to get started:

- Start with key business questions: Identify the primary challenges and opportunities where GenAI can make the most impact. Understanding the specific needs and goals of the organization is crucial for successful GenAI implementation.

- Experiment and scale: Begin with pilot projects to test GenAI solutions. Learn from these experiments and scale up successful initiatives. This iterative approach allows organizations to refine their strategies and maximize the value of GenAI.

- Create a flywheel effect: Use initial successes to drive momentum and continuous improvement across the organization. As GenAI solutions demonstrate their value, they generate further support and investment, creating a virtuous cycle of innovation and growth.

Security solutions for enabling financial transformation

While the benefits of GenAI are impressive, securing these systems is just as important. Ensuring compliance with financial regulations from the start is essential to protect sensitive data and maintain trust. Here are some key security measures to consider in the planning phase:

Strict access control

Ensuring that only authorized personnel can access sensitive financial data is fundamental. Implementing strict access controls protects data and reduces the risk of unauthorized access.

Compliance checks and validation

Regularly verify that GenAI models do not introduce significant risks. Compliance checks and validation ensure that GenAI solutions adhere to regulatory requirements and maintain data integrity.

Ongoing monitoring and automated audits

Continuous monitoring of GenAI systems and conducting automated audits help maintain security and compliance.
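One way to make automated audits practical is a tamper-evident log, where each entry stores a hash of the previous one so that later edits can be detected. The sketch below is a simplified illustration, not a production design: the `AuditLog` class and its field names are hypothetical, and a real system would persist entries to protected storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """In-memory, hash-chained log of who did what to which resource."""

    def __init__(self):
        self.entries = []

    def record(self, user: str, action: str, resource: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "prev_hash": prev_hash,
        }
        # The hash covers every field above, including the link to the previous entry.
        body["hash"] = hashlib.sha256(
            json.dumps(body, sort_keys=True).encode()
        ).hexdigest()
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and check that the chain is unbroken."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            expected = hashlib.sha256(
                json.dumps(body, sort_keys=True).encode()
            ).hexdigest()
            if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("analyst_1", "query", "q3_revenue_report")
log.record("analyst_1", "export", "q3_revenue_report")
chain_ok = log.verify()
```

An automated audit job could call `verify()` on a schedule and raise an alert if it ever returns `False`.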

Improved data security and privacy

Protecting sensitive information through robust security measures—including encryption and anonymization—is essential. Enhancing data security and privacy safeguards against breaches and maintains client trust.

Other important security steps include guarding access, enforcing policies, managing risks seamlessly, improving reporting, and securely storing data.

Balancing GenAI usage as its value rises

GenAI offers a lot of benefits, but it also comes with data privacy concerns. To address these, organizations need to:

- Implement multi-layered security: Use role-based access control and least privilege principles to protect sensitive information. This approach ensures that only authorized personnel can access data.

- Ensure robust encryption: Safeguard data with encryption and anonymization techniques. These measures protect sensitive information from unauthorized access and breaches.

- Conduct regular audits: Schedule vulnerability scans and audits to identify and address potential risks. Regular assessments help maintain the security and effectiveness of GenAI systems.
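The first two measures can be sketched together in Python. This is a hedged illustration: the `ROLE_FIELDS` map, the role names, and the `view_record` and `pseudonymize` helpers are hypothetical. Note that the example uses a salted hash to pseudonymize an identifier, which is a different technique from encryption and is weaker than full anonymization, so treat it as a stand-in for whatever controls your security team mandates.

```python
import hashlib

# Hypothetical role-to-field map: each role sees only the fields it needs (least privilege).
ROLE_FIELDS = {
    "analyst": {"account_id", "balance"},
    "auditor": {"account_id", "balance", "owner_name"},
}


def pseudonymize(value: str, salt: str = "demo-salt") -> str:
    """Replace an identifier with a salted SHA-256 digest prefix (illustrative only)."""
    return hashlib.sha256((salt + value).encode()).hexdigest()[:12]


def view_record(record: dict, role: str) -> dict:
    """Return only the fields the role may see, with the account ID pseudonymized."""
    allowed = ROLE_FIELDS.get(role, set())  # unknown roles see nothing
    visible = {k: v for k, v in record.items() if k in allowed}
    if "account_id" in visible:
        visible["account_id"] = pseudonymize(visible["account_id"])
    return visible


record = {"account_id": "ACC-1001", "balance": 2500, "owner_name": "Jane Doe"}
analyst_view = view_record(record, "analyst")
intern_view = view_record(record, "intern")
```

Defaulting unknown roles to an empty set means access has to be granted explicitly, which is the core of the least-privilege principle.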

Conclusion

GenAI is reshaping the financial services industry, offering huge benefits in automating tasks, improving stakeholder interactions, and staying compliant. But as data grows, strong security measures are more important than ever.

Security isn’t optional—it’s a must. Protecting sensitive information and managing risks are key to making the most of GenAI. As financial institutions embrace GenAI, they need to build security into their strategies to stay competitive and maintain trust.

Now’s the time to adopt GenAI, but do it with security at the core. This approach will help you keep up with the fast-paced market while protecting your operations.

As Heads of AI and VPs of Engineering explore generative AI (GenAI), they encounter a "chicken and egg" problem: the various benefits and capabilities of GenAI are juxtaposed against obstacles like performance limitations, high costs, stringent security requirements, complex legal frameworks, and rigorous compliance mandates.

With the adoption of GenAI, leaders face a fundamental question: Does the promise of GenAI outweigh the complexities of its implementation?

The Innovation Dilemma

GenAI is one of the most exciting developments to emerge in the tech industry in the last few decades, because it has the potential to drastically shift how businesses operate. From operational efficiency to expansion capabilities, it’s going to change how we approach and solve problems across every sector.

Here are some examples that illustrate the transformative capabilities of GenAI:

| Application Area | How GenAI Transforms It | Business Impact |
|---|---|---|
| Customer Support | GenAI can analyze past interactions and customer preferences in real time, personalizing interactions. | • Reduces workload for support agents by hours • Increases sales thanks to personalized recommendations • Long-tail increase in customer satisfaction and loyalty • Cuts operational costs |
| Predictive Maintenance | GenAI can predict equipment failures by analyzing sensor data, allowing proactive maintenance strategies. | • Reduces downtime due to unexpected maintenance needs • Lowers maintenance costs • Extends equipment lifespan • Improves production efficiency and safety |

The competitive advantages are substantial. But with such promising innovation at our fingertips, why the dilemma?

Despite the capabilities of GenAI, there are three major challenges putting a pause on widespread adoption:

- Performance issues: Slow and inefficient performance can deter organizations from changing established workflows. Does the practicality of integrating new technologies outweigh the potential benefits?

- High costs: The investment required to implement GenAI makes the return on investment (ROI) uncertain, especially in scenarios where the end value is not clearly visible.

- Security and compliance risks: Stringent requirements and potential risks create barriers to experimentation and operational deployment, particularly with the need for secure and compliant data handling.

Case Study: Seeing it in Action

Let's look at an illustrative example. Imagine a technology company wants to improve its financial reporting with a new application called the Financial Co-Pilot. This tool aims to cut the time financial analysts spend on reports from weeks to minutes, freeing up many hours for more strategic tasks that move the business forward.

Here are the questions that leaders might consider when weighing the investment.

- Performance: Does the app perform fast enough to meet analysts' expectations without causing delays or errors that could undermine trust in the tool?

- Accuracy: Does the app give analysts accurate, meaningful data that helps them complete their tasks faster?

- Return on investment: Does the business impact—such as speedier reporting and increased efficiency—justify the investment required for development and ongoing maintenance?

- Data security: Given the sensitivity of the financial data involved, can the company ensure robust security measures are in place to protect this data from potential leaks or breaches?

Now you see the "chicken and egg" problem in action. If the financial co-pilot application is to revolutionize financial reporting for this company, it needs to be fast, secure, and cost-effective.

But achieving that trifecta is a high hurdle.

Opsin's Approach to GenAI Innovation

The good news? Opsin solves the “chicken and egg” problem that AI leaders are facing.

Here’s how:

We secure applications from the ground up.

Opsin starts with security, embedding it deeply within the architecture of applications like the Financial Co-Pilot. This ensures robust protection from the outset, maintaining system security without compromising on performance. Our security orchestration keeps sensitive financial data, and the level of access to it, up to date with the organization’s security policies.

Opsin’s platform allows financial analysts responsible for certain reports to access only the data they need for their tasks. Similarly, contractors from different subsidiaries are granted access solely to the information necessary for improving their reporting and operational work. This tailored access is continuously updated to align with the organization’s security policies, ensuring that data protection measures evolve with changing needs.

We enable fast-paced development and experimentation.

Opsin's approach accelerates development cycles, allowing for rapid iteration without the usual slowdown from compliance and security checks. For applications that need to stay ahead of market demands and technological advances, Opsin facilitates continuous synchronization and advanced access control mechanisms that ensure efficient real-time data handling—without creating bottlenecks.

We’re seamless and secure by design.

Opsin offers seamless integration, enabling you to begin interacting with your data in just a few clicks for a quick and easy start. Whether you prefer on-premises or cloud deployments, our flexible deployment options ensure that you can choose the best setup for your business needs.

We orchestrate performance by managing model integration and optimization across providers like OpenAI and Google. This means you always have access to the best tools without compromising the security and integrity of your data. Our comprehensive backend management handles all aspects of performance, cost, and security—allowing you to focus on achieving your GenAI goals.

We ensure transparency and compliance.

Opsin rigorously adheres to legal and privacy standards, implementing comprehensive auditing processes that track and log every interaction. This helps you stay within the stringent requirements of regulations like SOC 2, GDPR, and CCPA. Our platform is designed to scale with your operations, accommodating increased loads and complex data environments without performance degradation.

Benefits of a Trusted and Secure GenAI Environment

Imagine a more trusted and secure approach to innovation. Here are the key business benefits of that kind of environment:

- Accelerated innovation cycles: By simplifying the management of infrastructure, performance, and security, companies can focus on innovation.

- Lower operational costs and higher ROI: Removing the need to navigate complex security and legal issues reduces costs and allows teams to focus on core business goals—improving ROI.

- Enhanced Security and Trust: Starting with a secure foundation helps prevent data breaches and ensures compliance, which builds trust both within the organization and with clients. This approach also helps avoid costly penalties.

Conclusion

We've examined the "chicken and egg" problem inherent in adopting GenAI. But despite the hurdles of performance issues, high costs, and stringent security and compliance demands, the benefits of GenAI—such as improved operational efficiency and expanded capabilities across sectors—cannot be ignored.

Opsin's innovative approach provides a viable solution by prioritizing security, performance, and compliance from the ground up. This strategy allows for rapid development and deployment cycles, without compromising on security and performance. We encourage AI leaders to embrace this methodology for a seamless transition from experimentation to full-scale production.

With Opsin, you don't have to choose between the chicken or the egg—you get to enjoy both. More chicken, more eggs, please!

Overcoming the “Chicken and Egg” Problem in GenAI: A Path to Innovation with Security and Performance

As Heads of AI and VPs of Engineering explore generative AI (GenAI), they encounter a "chicken and egg" problem: the various benefits and capabilities of GenAI are juxtaposed against obstacles like performance limitations, high costs, stringent security requirements, complex legal frameworks, and rigorous compliance mandates.

With the adoption of GenAI, leaders face a fundamental question: Does the promise of GenAI outweigh the complexities of its implementation?

The Innovation Dilemma

GenAI is one of the most exciting developments to emerge in the tech industry in the last few decades because it has the potential to drastically shift how businesses operate. From operational efficiency to expanded capabilities, it’s going to change how we approach and solve problems across every sector.

Here are some examples that illustrate the transformative capabilities of GenAI:

| Application Area | How GenAI Transforms It | Business Impact |
|---|---|---|
| Customer Support | GenAI can analyze past interactions and customer preferences in real time, personalizing interactions. | • Reduces workload for support agents by hours • Increases sales through personalized recommendations • Increases customer satisfaction and loyalty over the long term • Cuts operational costs |
| Predictive Maintenance | GenAI can predict equipment failures by analyzing sensor data, allowing proactive maintenance strategies. | • Reduces downtime due to unexpected maintenance needs • Lowers maintenance costs • Extends equipment lifespan • Improves production efficiency and safety |

The competitive advantages are substantial. But with such promising innovation at our fingertips, why the dilemma?

Despite the capabilities of GenAI, there are three major challenges putting a pause on widespread adoption:

- Performance issues: Slow and inefficient performance can deter organizations from changing established workflows. Do the potential benefits outweigh the practical difficulty of integrating new technologies?

- High costs: The investment required to implement GenAI makes the return on investment (ROI) uncertain, especially in scenarios where the end value is not clearly visible.

- Security and compliance risks: Stringent requirements and potential risks create barriers to experimentation and operational deployment, particularly with the need for secure and compliant data handling.

Case Study: Seeing it in Action

Let's take a look at a practical example. Imagine a technology company wants to improve its financial reporting with a new application called the Financial Co-Pilot. This tool aims to reduce the time financial analysts spend on reports from weeks to minutes, freeing up many hours for the analysts to work on more strategic tasks that move the business forward.

Here are the questions that leaders might consider when weighing the investment.

- Performance: Does the app perform fast enough to meet analysts' expectations without causing delays or errors that could undermine trust in the tool?

- Accuracy: Does the app help analysts get accurate, meaningful data that lets them complete their tasks faster?

- Return on investment: Does the business impact—such as speedier reporting and increased efficiency—justify the investment required for development and ongoing maintenance?

- Data security: Given the sensitivity of the financial data involved, can the company ensure robust security measures are in place to protect this data from potential leaks or breaches?

Now you see the "chicken and egg" problem in action. If the Financial Co-Pilot application is to revolutionize financial reporting for this company, it needs to be fast, secure, and cost-effective.

But achieving that trifecta is a high hurdle.

Opsin's Approach to GenAI Innovation

The good news? Opsin solves the “chicken and egg” problem that AI leaders are facing.

Here’s how:

We secure applications from the ground up.

Opsin starts with security, embedding it deeply within the architecture of applications like the Financial Co-Pilot. This ensures robust protection from the outset, maintaining system security without compromising on performance. Our security orchestration keeps access to sensitive financial data aligned with the organization’s security policies.

Opsin’s platform allows financial analysts responsible for certain reports to access only the data they need for their tasks. Similarly, contractors from different subsidiaries are granted access solely to the information necessary for improving their reporting and operational work. This tailored access is continuously updated to align with the organization’s security policies, ensuring that data protection measures evolve with changing needs.
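To make the idea of task-scoped access concrete, here is a minimal Python sketch of group-based filtering applied before any data reaches a GenAI application. It is an illustration only, not Opsin's implementation: the `Document`, `User`, and `filter_by_access` names, and the group labels, are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # groups permitted to read this document (hypothetical labels)


@dataclass
class User:
    name: str
    groups: set = field(default_factory=set)


def filter_by_access(user, documents):
    """Keep only the documents that share at least one group with the user."""
    return [doc for doc in documents if doc.allowed_groups & user.groups]


# Hypothetical data: an analyst and a subsidiary contractor see different slices.
docs = [
    Document("q3-revenue", "Q3 revenue summary", frozenset({"finance-reporting"})),
    Document("payroll", "Payroll detail", frozenset({"hr"})),
    Document("subsidiary-a-ops", "Subsidiary A operations", frozenset({"subsidiary-a"})),
]
analyst = User("analyst", {"finance-reporting"})
contractor = User("contractor", {"subsidiary-a"})

visible_to_analyst = [doc.doc_id for doc in filter_by_access(analyst, docs)]
visible_to_contractor = [doc.doc_id for doc in filter_by_access(contractor, docs)]
```

In a sketch like this, updating a user's groups or a document's `allowed_groups` changes what is visible on the next request, which is one simple way access can track changing policies.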

We enable fast-paced development and experimentation.

Opsin's approach accelerates development cycles, allowing for rapid iteration without the usual slowdown from compliance and security checks. For applications that need to stay ahead of market demands and technological advances, Opsin facilitates continuous synchronization and advanced access control mechanisms that ensure efficient real-time data handling—without creating bottlenecks.

We’re seamless and secure by design.

Opsin offers seamless integration, enabling you to begin interacting with your data in just a few clicks for a quick and easy start. Whether you prefer on-premise or cloud deployments, our flexible deployment options ensure that you can choose the best setup for your business needs.

We orchestrate performance by managing model integration and optimization across providers like OpenAI and Google. This means you always have access to the best tools without compromising the security and integrity of your data. Our comprehensive backend management handles all aspects of performance, cost, and security—allowing you to focus on achieving your GenAI goals.

We ensure transparency and compliance.

Opsin rigorously adheres to legal and privacy standards, implementing comprehensive auditing processes that track and log every interaction. This helps you stay within the stringent requirements of frameworks and regulations such as SOC 2, GDPR, and CCPA. Our platform is designed to scale with your operations, accommodating increased loads and complex data environments without performance degradation.
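As a rough illustration of what "track and log every interaction" can look like in code, the sketch below records one JSON entry per interaction in an in-memory log. It is an assumption-laden example rather than a description of Opsin's auditing: the `AuditLog` class, its field names, and the sample values are hypothetical, and a production system would write to durable, tamper-resistant storage.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only audit trail kept in memory for illustration."""

    def __init__(self):
        self.records = []

    def record(self, user, action, resource):
        """Append one JSON-encoded entry describing who did what to which resource."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
        }
        self.records.append(json.dumps(entry, sort_keys=True))
        return entry


# Example: log a single query made by an analyst (sample values are made up).
log = AuditLog()
entry = log.record(user="analyst", action="query", resource="q3-revenue")
```

Storing each entry as a serialized string keeps earlier records from being altered by later changes to the returned dictionary.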

Benefits of a Trusted and Secure GenAI Environment

Imagine a more trusted and secure approach to innovation. Here are the key business benefits of that kind of environment:

- Accelerated innovation cycles: By simplifying the management of infrastructure, performance, and security, companies can focus on innovation.

- Lower operational costs and higher ROI: Removing the need to navigate complex security and legal issues reduces costs and allows teams to focus on core business goals—improving ROI.

- Enhanced security and trust: Starting with a secure foundation helps prevent data breaches and ensures compliance, which builds trust both within the organization and with clients. This approach also helps avoid costly penalties.

Conclusion

We've examined the "chicken and egg" problem inherent in adopting GenAI. But despite the hurdles of performance issues, high costs, and stringent security and compliance demands, the benefits of GenAI—such as improved operational efficiency and expanded capabilities across sectors—cannot be ignored.

Opsin's innovative approach provides a viable solution by prioritizing security, performance, and compliance from the ground up. This strategy allows for rapid development and deployment cycles, without compromising on security and performance. We encourage AI leaders to embrace this methodology for a seamless transition from experimentation to full-scale production.

With Opsin, you don't have to choose between the chicken and the egg: you get to enjoy both. More chicken, more eggs, please!